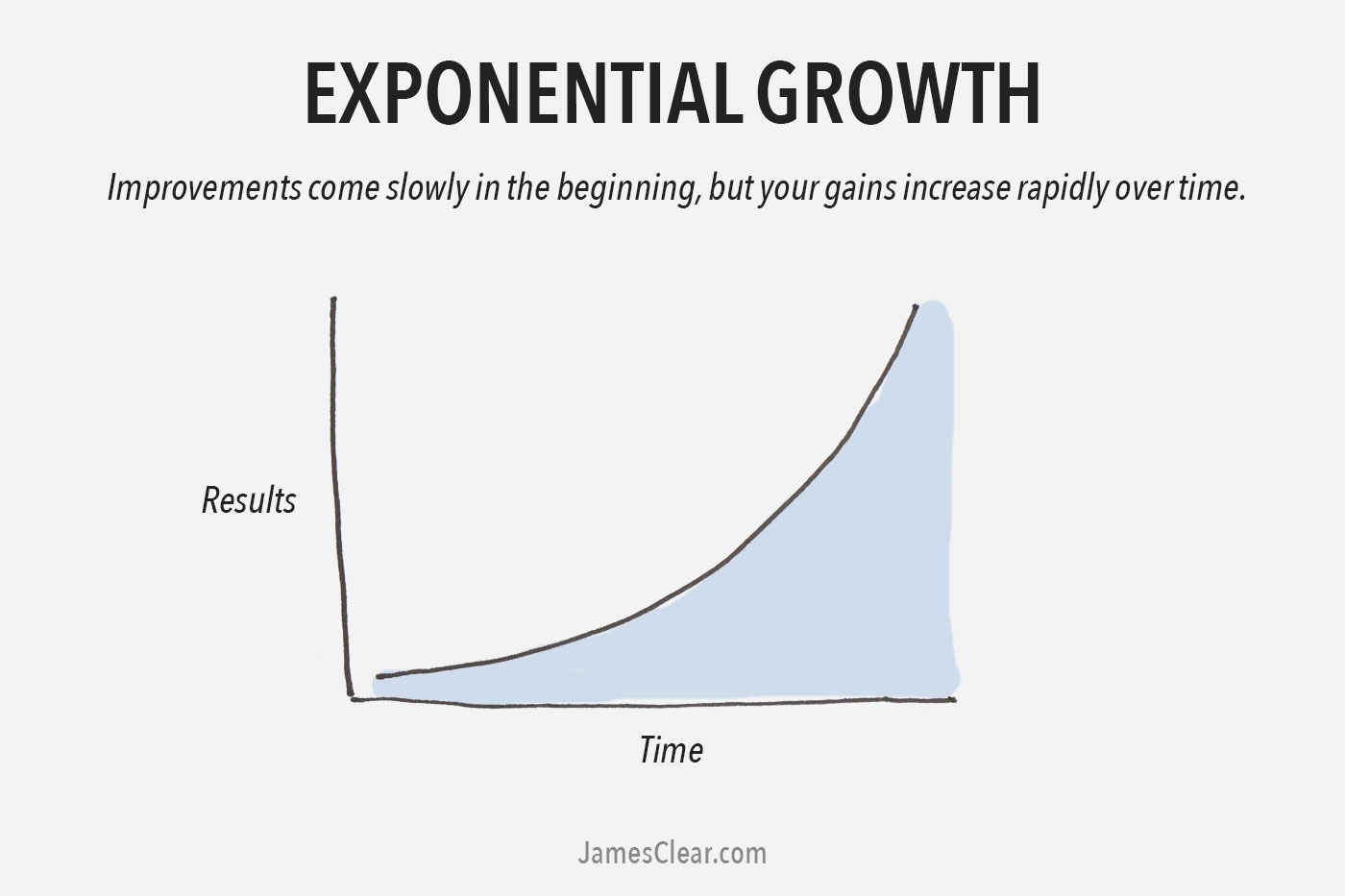

Between 2017 and 2022, I frequently presented to various audiences my perspective on AI as an exponentially growing technology.

Most people struggle with grasping exponential growth due to its non-linear nature, which conflicts with our innate linear thinking.

We assume that what’s true now will more or less remain true 3, 4, or 5 years ahead.

To elucidate this concept, I’d like to recount a story about the invention of chess, which, though its authenticity as the game's origin is uncertain, effectively illustrates exponential growth.

A wise man invents the game of chess and presents it to his king.

The king, impressed by the game, asks the wise man what he would like as a reward. The wise man, appearing modest, asks for a seemingly simple reward: a grain of rice on the first square of the chessboard, two grains on the second, four on the third, and so on, doubling the amount on each subsequent square for all 64 squares of the chessboard.

The king, underestimating the power of exponential growth, quickly agrees, only to discover that the total amount of rice required is far more than anticipated. The 64th square alone holds 2^63 grains (about 9.2 quintillion), and the whole board adds up to 2^64 - 1, over 18 quintillion grains, a quantity far larger than the world’s annual rice production.
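The arithmetic behind the story is easy to check. The short sketch below (the function names are my own, chosen for illustration) computes the grains on a single square and the running total for the whole board, which shows that the last square holds 2^63 grains while the total across all 64 squares is 2^64 - 1.

```python
def grains_on_square(k: int) -> int:
    """Grains on square k (1-indexed): 1, 2, 4, ... doubling each square."""
    return 2 ** (k - 1)


def total_grains(squares: int = 64) -> int:
    """Total grains on the first `squares` squares of the board."""
    return sum(grains_on_square(k) for k in range(1, squares + 1))


last_square = grains_on_square(64)
total = total_grains(64)

print(f"Square 64 alone: {last_square:,}")  # 9,223,372,036,854,775,808
print(f"Whole board:     {total:,}")        # 18,446,744,073,709,551,615
print(f"After 10 squares: {total_grains(10):,}")  # 1,023
```

Note how unremarkable the first ten squares look (about a thousand grains in total) compared with where the doubling ends up.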

Our intuition is often ill-equipped to grasp the true nature of exponential growth. What starts as a small number quickly becomes unimaginably large.

In the context of AI, exponential growth represents how rapid advancements in technology, particularly those that build upon previous developments, can lead to an exponential increase in capabilities and impact. Just like the grains of rice, advancements in AI algorithms, computing power, and human capital might seem small at each step but accumulate to a massive scale over time.

When I speak to audiences today, I highlight with a sense of satisfaction that AI has finally had its ‘exponential moment’ - meaning, it has begun its exponential growth phase in the past year. This doesn't imply that AI has reached its peak; rather, it's the beginning of a rapid acceleration. This means we can expect AI to advance significantly in capabilities and applications in the coming years. The term 'exponential moment' here refers to the onset of a period where advancements in AI will increasingly occur at a faster rate, enhancing both its sophistication and practical utility.

The pivotal point may feel like the moment that OpenAI released ChatGPT, but in reality, the growth that we witnessed at the end of 2022 and throughout 2023 is the culmination of cumulative developments across three key areas: algorithmic improvements, processing capabilities, and a surge in research and human capital investment.

First, let’s talk about improvements in AI algorithms

I find Howard Gardner's theory of multiple intelligences useful for categorizing advancements in AI. This helps me compartmentalize and consider what aspects of intelligence we are able to co-create in machines.

According to Gardner, amongst humans, there are different ways of being intelligent. A person can have varying degrees of ability across the nine types of intelligences. For example, they can have exceptional logical-mathematical intelligence but be lacking in interpersonal intelligence.

I have mapped the nine types of intelligences below to advances and breakthrough moments in AI specifically from 2023.

This theory suggests that humans possess different types of intelligence, and if AI is to achieve a human-like general intelligence, it must exhibit capabilities across all these domains. In 2023, AI has made significant strides in several of these intelligences.

Visual-spatial, the ability to read maps, charts, videos and pictures

In 2023, generative AI made significant strides in creating imagery. Text-to-image models like OpenAI's DALL-E 2, Stability AI's Stable Diffusion, and Google's Imagen have shown remarkable abilities to generate stunning visuals from textual descriptions. DALL-E 2 marked a significant improvement in the quality and resolution of generated images. One of the key technical advancements was the introduction of a new technique called "unCLIP," which allows for more accurate alignment between text inputs and generated images. This development enhanced the model's ability to understand and interpret complex textual descriptions, translating them into visually coherent and contextually relevant images.

Stable Diffusion is noteworthy for its democratization of text-to-image models, making high-quality image generation accessible on standard consumer hardware. This was achieved through advancements in the efficiency of the model, allowing it to operate without requiring high-end GPUs. The model's architecture enables quick and high-fidelity image generation, making it a significant tool for creative applications.

Linguistic-verbal, the ability to use words well, both when writing and speaking

This one is obvious. Large Language Models are excellent at… well… language.

One study last year explored the causal impact of GenAI ideas on the production of unstructured creative output, in an online experiment where some writers could obtain ideas for a story from a GenAI platform. Researchers compared short stories written by humans alone with stories written by humans with AI assistance. They found that the human+GenAI stories were better among less creative writers, meaning that humans who are already highly creative may not glean as much value from the AI. The takeaway for me is that GenAI can impressively produce novel story ideas within minutes. It’s also helpful to remember that these models are the worst they will ever be, and since their progression is likely on an exponential trend, they will only become better over time.

Some other notable advancements were:

Enhanced Model Alignment and Reduced Error Rates: GPT-4 has been designed to enhance model alignment, which means it generates fewer offensive or dangerous outputs and shows improved factual correctness.

Compared to its predecessor, GPT-4 demonstrates a 40% reduction in factual and reasoning errors. This advancement indicates a significant leap in AI's ability to process and understand human language more accurately and safely.

Performance on Professional and Academic Benchmarks: OpenAI tested GPT-4 on a diverse set of benchmarks, including academic and professional exams originally designed for humans. GPT-4 exhibited human-level performance on these benchmarks, which included exams like the Uniform Bar Examination, LSAT, and SAT. The full paper on the topic can be accessed here.

This achievement highlights GPT-4's enhanced capabilities in understanding and processing complex language-based tasks. The achievement of OpenAI's GPT-4 in passing the Uniform Bar Examination (UBE) is indeed a significant milestone, but it's crucial to understand the context and methodology behind this accomplishment.

It wasn’t a straightforward case of the AI simply taking the test like a human candidate. The process involved careful and specific prompting, and the test conditions were simulated rather than administered in a standard testing environment.

The AI was evaluated using exam-specific rubrics, and the tests included both multiple-choice and free-response questions. For the evaluation, separate prompts were designed for each question format, and images were included in the input for questions that required visual information. It's important to note that this type of controlled testing environment is quite different from the conditions under which human candidates typically take these exams.

Moreover, the evaluation setup was based on performance on a validation set of exams, and final results were reported on held-out test exams. The overall scores were determined by combining multiple-choice and free-response question scores using publicly available methodologies for each exam. This means that the AI's performance was measured in a manner that reflects its ability to process and respond to the types of questions typically found on these exams, rather than its ability to navigate the complexities of a live testing situation.

These details highlight the nuanced and controlled manner in which GPT-4's capabilities were evaluated. While the results are impressive and indicative of the AI's advanced linguistic-verbal intelligence, they should be contextualized within the specific testing methodology employed.

Multilingual Capabilities: GPT-4's multilingual capabilities were also tested on a benchmark translated into other languages using Azure Translate, showcasing its proficiency in various languages. It was found to outperform the English-language performance of its predecessor, GPT-3.5, in 24 out of 26 languages tested, demonstrating its improved capabilities in linguistic diversity and understanding.

Limitations in Language Depth and Nuance: While GPT-4 shows improved performance in various languages, there may still be limitations in its understanding of the depth and nuances of each language. This includes challenges in grasping cultural contexts, idiomatic expressions, and regional dialects that are integral to languages.

Translation and Contextual Accuracy: Multilingual models often face challenges in accurately translating or maintaining the context across languages. Subtleties and nuances can be lost or misrepresented, leading to inaccuracies in translation or contextual understanding.

I would recommend checking out the full technical report here.

Logical-mathematical, the ability to think conceptually about numbers and patterns

Google DeepMind's AI tackled a complex problem last year in extremal combinatorics, specifically the cap set problem. This problem involves finding the largest possible set of vectors in a space such that no three vectors sum to zero. The AI's approach to this problem, termed "FunSearch," combined a large language model with an evolutionary algorithm. This method enabled the discovery of new constructions of large cap sets, surpassing previous known results.
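To make the cap set condition concrete, here is a minimal brute-force checker. It assumes the usual setting for the problem, vectors whose coordinates are integers mod 3, and tests that no three distinct vectors sum to zero. This is only an illustration of the definition, not FunSearch itself, and the function name `is_cap_set` is my own.

```python
from itertools import combinations


def is_cap_set(vectors) -> bool:
    """Return True if no three distinct vectors sum to zero (mod 3).

    Assumes every vector has the same length and entries in {0, 1, 2}.
    """
    vecs = [tuple(v) for v in vectors]
    if len(set(vecs)) != len(vecs):
        return False  # duplicates are not allowed in a set
    for a, b, c in combinations(vecs, 3):
        # Check the coordinate-wise sum of the triple, reduced mod 3.
        if all((x + y + z) % 3 == 0 for x, y, z in zip(a, b, c)):
            return False
    return True


# In two dimensions, these four points form a cap set.
square = [(0, 0), (0, 1), (1, 0), (1, 1)]
print(is_cap_set(square))             # True

# Adding (2, 2) breaks it: (0,0) + (1,1) + (2,2) = (3,3) = (0,0) mod 3.
print(is_cap_set(square + [(2, 2)]))  # False
```

Checking a candidate set is cheap. The hard part, and the part FunSearch addressed, is finding large sets that pass this check as the dimension grows.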

This achievement demonstrates AI's advanced capabilities in logical-mathematical intelligence, particularly in abstract problem-solving and generating novel solutions to complex mathematical challenges.

Bodily-kinesthetic, the ability to be good at body movement, coordination, and physical control

Researchers developed a groundbreaking AI model for dance choreography called Editable Dance Generation (EDGE). EDGE is a generative AI model that can choreograph human dance animation to match any piece of music. This model represents a significant advancement in AI's bodily-kinesthetic intelligence.

EDGE's technical breakthrough lies in its unique blend of AI technologies. It uses a transformer-based diffusion model paired with Jukebox, a robust music feature extractor. This combination enables EDGE to generate dances that are not only realistic and physically plausible but also closely aligned with the rhythm and emotional content of the input music. One of the key features of EDGE is its editability, allowing for fine-tuned control over the dance movements. Users can specify certain body movements, and the AI will complete the rest of the choreography in a way that is seamless and consistent with the music.

The EDGE model is trained on a dataset consisting of high-quality dance motions paired with music from various genres. It outperformed existing state-of-the-art dance generation methods in several metrics, including human evaluations, physical plausibility, and beat alignment, a truly mind-blowing achievement for AI.

Musical intelligence, the ability to compose or perform music

One of my favorite creations or advancements in AI making music is AIVA. Interestingly, AIVA was created in 2016, and its compositions are popular on YouTube. I had the pleasure of sharing the stage with the CEO of AIVA at the LEAP conference in Saudi Arabia in 2021. Turns out AIVA’s soundtracks powered the entire conference.

In 2023, Google joined the bandwagon and revealed a notable advancement in musical intelligence with their AI model called MusicLM. This text-to-music generator transforms written prompts into musical compositions encompassing various styles and instruments. What sets MusicLM apart is its ability to create high-quality music based on text descriptions, extending AI's assistance in creative music tasks. The AI can extrapolate from a snippet of a melody, adjust instruments and performance quality, and even create music to fit visual art or a story. However, it's not publicly available due to potential copyright infringement concerns. This development underscores AI's evolving role in creative industries, particularly in music composition, showing an increased ability to interpret and generate complex musical compositions from textual or visual inputs.

Interpersonal intelligence, the ability to assess the emotions, motivations, desires and intentions of others

Generally speaking, AI still falls short on the emotional intelligence spectrum, and there haven’t been any significant advancements that demonstrate AI’s ability to truly understand and respond to human emotion. One notable release a few years ago was Ellie, an AI-powered therapist designed to monitor micro-expressions, respond to facial cues, perform sympathetic gestures and build rapport with patients.

There was a lot of buzz last year around Replika AI, which is designed for emotional interaction and support. Replika AI, notably, has been utilized as a tool for mitigating loneliness and providing mental health support. Users have reported forming emotional connections with Replika, using it as a companion and even for therapeutic interactions. The AI behind Replika is designed to engage users in text-based conversations, evolving to include voice chats and immersive experiences. This development reflects the AI's ability to 'understand' emotions and personalities and provide human-like responses.

According to the company, Replika uses a sophisticated system that combines its own Large Language Model with scripted dialogue content. Previously, Replika also used a supplementary model that was developed together with OpenAI, but it has since switched to exclusively using its own, which the company says tends to show better results.

I’m not 100% sold on Replika, since user satisfaction dropped in the months that it was used and I suspect Luka Inc is running out of money, but it represents a shift towards using AI for human connection and interaction. At the end of the day, if an LLM can fake understanding emotions but still achieve the goal of providing support and therapeutic suggestions, that’s good enough.

Intrapersonal intelligence, the ability to self-reflect and be aware of one’s own emotional states, feelings and motivations

Intrapersonal intelligence, to me, is all about consciousness. It brings to mind the famous thought experiment by philosopher Thomas Nagel, titled "What Is It Like to Be a Bat?". Nagel's experiment considers the subjective experience, or 'what it is like', to be a conscious being – in this case, a bat. He argues that if there is a subjective way to experience the world, then that entity is conscious.

The nature of consciousness, however, remains one of the most profound mysteries in both neuroscience and philosophy, often referred to as the “hard problem of consciousness”, and it is closely tied to the notion of “qualia”. Qualia are the individual instances of subjective, conscious experience – the 'what it feels like' aspect of consciousness. Despite extensive research and theorizing, the exact nature of consciousness and qualia remains elusive. The difficulty lies in explaining how and why certain physical processes in the brain give rise to subjective experiences, and why these experiences have the specific qualities they do – such as the experience of the color red or the feeling of pain.

When discussing intrapersonal intelligence in the context of AI, we venture into the realm of consciousness and subjective experience, an area still not fully understood and far from being replicated in artificial systems.

One interesting contribution to this field is a report that argues for a rigorous and empirically grounded approach to AI consciousness, assessing AI systems in light of our best-supported neuroscientific theories of consciousness. This involves analyzing existing AI systems and their functionalities in comparison to human consciousness theories. The goal is to better understand the boundary between conscious and non-conscious processes in AI systems, which is crucial for ethical AI development (source: arXiv).

Moreover, a study published in "Frontiers in Robotics and AI" examines the concept of artificial consciousness from the perspective of creating an ethical AI system. This study suggests a Global Workspace Theory (GWT)-based AI system, which could potentially adapt to different ethical situations with varying viewpoints and experience levels. The idea here is that various unconscious processors in an AI system could carry out moral analysis of a problem from different perspectives and compete for control of a global workspace, influencing the AI's decision-making process.

These discussions and theoretical explorations indicate a growing interest and effort in understanding AI's potential for consciousness and self-awareness.

But the field is still in a largely speculative and theoretical phase, with actual implementation of self-aware or conscious AI not yet realized. These studies contribute to the ongoing dialogue on what consciousness in AI could mean and how it might be achieved, but they do not suggest that AI has reached a level of self-awareness akin to human intrapersonal intelligence.

Naturalistic intelligence, the ability to be in tune with nature and nurture, explore the environment, and learn about other species

While there haven't been any major breakthroughs in 2023 specifically dedicated to environmental understanding and species identification, the ongoing advancements in AI have continued to contribute significantly to fields such as environmental monitoring. AI's role in these areas has been growing, especially in the utilization of machine learning algorithms for analyzing large sets of environmental data, identifying patterns, and aiding in research that can contribute to a better understanding of natural ecosystems.

Existential intelligence, the ability to ask the “big” questions about topics such as the meaning of life and how actions can serve larger goals

Existential intelligence is probably the most difficult to replicate in artificial intelligence. A quote by neuroscientist V.S. Ramachandran paints a picture of the mystery behind consciousness and, by extension, existential intelligence.

“How can a three-pound mass of jelly that you can hold in your palm imagine angels, contemplate the meaning of infinity, and even question its own place in the cosmos? Especially awe inspiring is the fact that any single brain, including yours, is made up of atoms that were forged in the hearts of countless, far-flung stars billions of years ago. These particles drifted for eons and light-years until gravity and chance brought them together here, now. These atoms now form a conglomerate - your brain - that can not only ponder the very stars that gave it birth but can also think about its own ability to think and wonder about its own ability to wonder. With the arrival of humans, it has been said, the universe has suddenly become conscious of itself. This, truly, is the greatest mystery of all.”

It’s safe to say that we have not achieved, and will not achieve at any point in the future, AI that has existential intelligence.

To me, this points to which skillsets and mindsets will be most valuable in humans, and which might already be automated by AI. Cultivating existential intelligence in future generations, for instance, might have to be the priority for school systems and curriculum developers. Similarly, critical thinking will be crucial as an increasing amount of content is produced by AI.

Second, let’s look at advances in computing power and model-efficiency.

NVIDIA unveiled new AI systems that significantly boost computational efficiency and performance. The GH200 Superchips, for example, deliver 16 petaflops of AI performance, demonstrating remarkable efficiency. One GH200 Superchip, using the NVIDIA TensorRT-LLM open-source library, is 100 times faster than a dual-socket x86 CPU system and nearly twice as energy-efficient as an x86 + H100 GPU server. These advancements in accelerated computing not only drive innovation across industries but also emphasize sustainable computing by reducing environmental impact. NVIDIA's contribution to the world's fastest supercomputers, including systems like the Eagle system using H100 GPUs, has dramatically increased computing power, reaching more than 2.5 exaflops across these systems.

Google DeepMind's efforts in 2023 also showcased significant strides in AI and computing. Their work on scaling vision transformers demonstrated state-of-the-art results in various vision tasks, extending the versatility of Transformer model architecture beyond language to domains like computer vision, audio, genomics, and more. This scaling is crucial for building more capable robots and other advanced AI applications. Additionally, DeepMind's research in algorithmic prompting and visual question answering represents a substantial move toward higher-level and multi-step reasoning, a key aspect of AI's advancement. These efforts are part of a broader trend in AI research, where the focus is on developing models that excel in diverse tasks, from software development life cycles to improving route suggestions for billions of users.

Microsoft also made significant strides in AI, complementing the advancements of NVIDIA and Google DeepMind, further contributing to AI's exponential growth.

Microsoft's efforts focused on optimizing foundation model performance, with innovations like LLMLingua for prompt compression and the development of more efficient AI models. They also announced the Microscaling Alliance, introducing the industry's first open data format for sub-8-bit training and inference in AI models. This initiative aims at enhancing the efficiency and scalability of deep learning.

These developments in 2023 reflect not just gradual improvements but a substantial leap in the capabilities of AI systems, driven by significant advancements in computing power. This progression aligns with the overall trend of exponential growth in AI, as we witness more powerful and efficient systems capable of handling increasingly complex tasks and large-scale computations.

Finally, we can explore how 2023 saw a surge in research and human capital investment

In 2023, the surge in research and human capital investment in AI was a pivotal factor contributing to its exponential moment. This surge can be quantified by examining the volume of academic papers published, the increased number of AI-related job postings, and the substantial growth in corporate and academic investment in AI research and development.

AI Publications: There has been a significant increase in AI publications, indicating robust global research activity.

AI Job Postings: In the United States, the share of job postings that were AI-related increased from 1.7% in 2021 to 1.9% in 2022, showing higher demand for AI skills.

AI PhD Graduates: A majority of new AI PhD graduates are now opting for industry jobs, illustrating the private sector's strong interest in AI talent.

Private Investment: Although there was a decrease in AI private investment in 2022 compared to 2021, the overall decade-long trend shows a substantial increase.

Furthermore, corporate and academic investments in AI have also seen substantial growth. Large technology companies and academic institutions have been pouring resources into AI research and development. This investment is not only financial but also involves dedicating human resources, such as researchers, engineers, and data scientists, to explore and innovate in AI. The AI Index Report includes data on corporate investment trends, mergers and acquisitions in the AI space, and the overall financial commitment to AI research, development, and implementation.

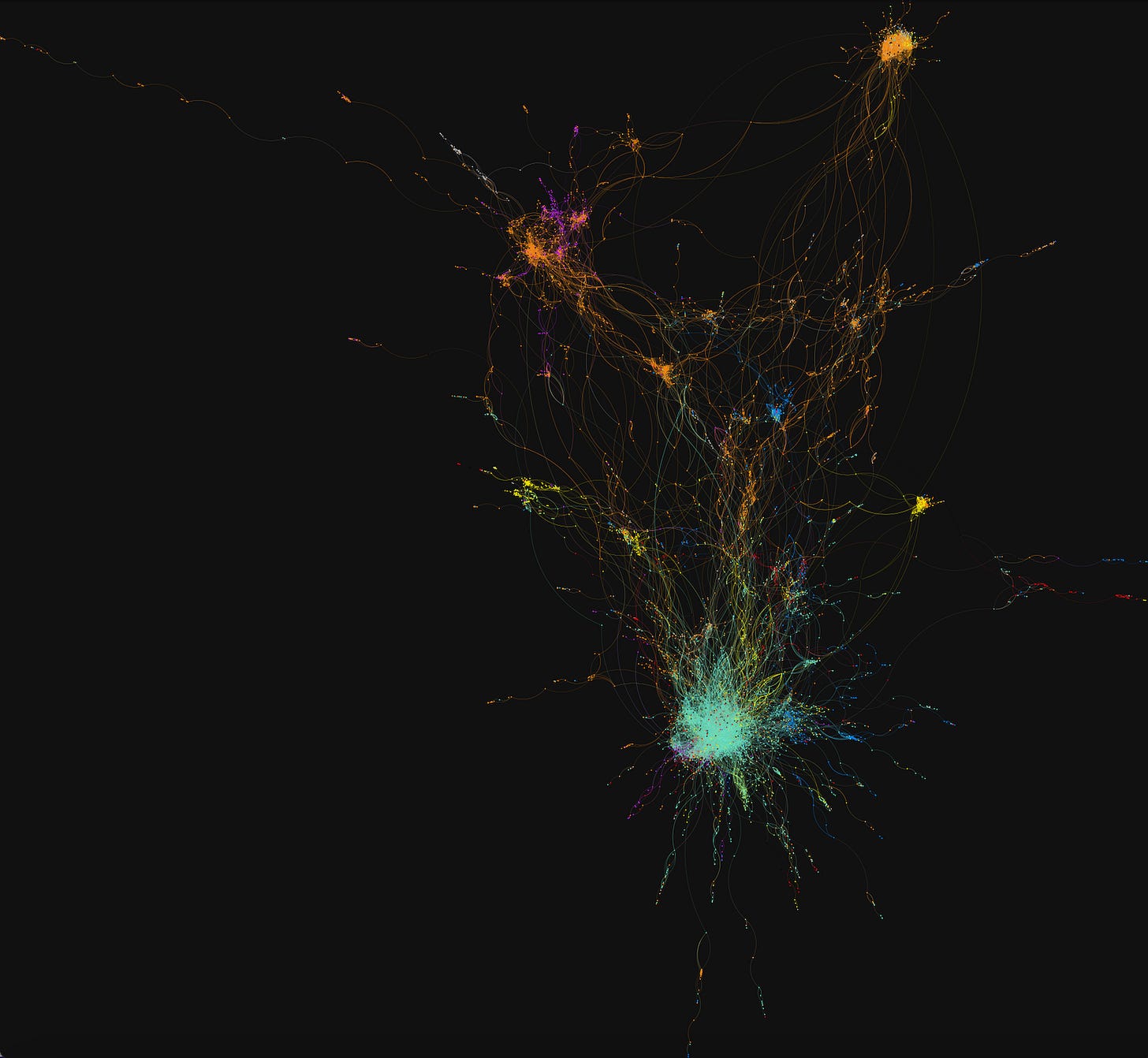

At AIDEN, the think tank that I am building as a comprehensive guide and coach for global entities to understand and navigate AI, we played around with publicly available data to identify trends and keywords. Specifically, we looked at papers published on arXiv and how similar the topics were. Our GitHub repository can be found here, and stay tuned for the next round of visualizations that we publish.
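For readers curious what "how similar the topics were" can mean in practice, here is a minimal sketch of one common approach: cosine similarity between word-count vectors of two abstracts. This is an assumption for illustration only and not necessarily the method used in our repository; the function names and sample abstracts below are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep alphabetic words only."""
    return re.findall(r"[a-z]+", text.lower())


def cosine_similarity(text_a: str, text_b: str) -> float:
    """Cosine similarity between the word-count vectors of two texts."""
    a, b = Counter(tokenize(text_a)), Counter(tokenize(text_b))
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text is treated as similar to nothing
    return dot / (norm_a * norm_b)


# Hypothetical abstracts, made up for this example.
paper_1 = "Diffusion models for text to image generation"
paper_2 = "Text to image generation with latent diffusion models"
paper_3 = "Evolutionary search for cap sets in combinatorics"

print(cosine_similarity(paper_1, paper_2) > cosine_similarity(paper_1, paper_3))
```

Scores close to 1 suggest overlapping vocabulary and scores near 0 suggest unrelated topics. A real analysis would typically remove common stop words or use embeddings instead of raw word counts.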